How to harness AI and save your business from the future

Generative AIs like ChatGPT, Bard and Bing are changing the world faster than we can imagine. So fast that there are now ChatGPT-like AIs that can run on smartphones. So fast that the cost of training a ChatGPT-like AI has dropped from $4.6 million in 2020 to $450k today. And so fast that startups are seeing their business models trashed by Google and Microsoft before they can get traction. The speed of change is making people suspect OpenAI is using ChatGPT to speed up its own development of new AI and features.

If you run a startup or a small to medium-sized business, generative AI is going to accelerate and empower you. You and your team are going to work smarter and faster. You’re going to do more with less, or grow and do much, much more than you imagined possible.

AI is going to make it possible for a lone founder to do what a medium-sized company does today, and it will allow a medium-sized company to do what right now only a big company can do.

This shift in ability to execute is making people worried that jobs are going to be lost as everyone incorporates AI into their workflows. However, as has been pointed out by many commentators, if your revenue per employee keeps going up as they complete work faster and do more using AI, why would you fire anyone?

How much of a change in productivity will we see? In a draft working paper titled “GPTs are GPTs: An Early Look at the Labor Market Impact Potential of Large Language Models”, released on March 27, 2023, Eloundou et al. make the following estimates:

“Our findings reveal that around 80% of the U.S. workforce could have at least 10% of their work tasks affected by the introduction of LLMs, while approximately 19% of workers may see at least 50% of their tasks impacted. … The projected effects span all wage levels, with higher-income jobs potentially facing greater exposure to LLM capabilities and LLM-powered software. … Our analysis suggests that, with access to an LLM, about 15% of all worker tasks in the US could be completed significantly faster at the same level of quality. When incorporating software and tooling built on top of LLMs, this share increases to between 47 and 56% of all tasks.”

To help you understand how you can make the most of the changes generative AI is going to bring, we’re going to start with the basics and give you a quick background on how ChatGPT and other generative AIs (including Microsoft’s Bing and Google’s Bard) work.

After that we’ll go through the ways you can take advantage of AI and not be left behind.

A simple introduction to ChatGPT

To paraphrase a random internet commenter: “People would be shocked if they understood how simple the software behind ChatGPT really is.” If you’re technically minded, this will show you how to build something similar to ChatGPT in 60 lines of code. It can even load the trained weights of GPT-2, one of ChatGPT’s predecessors.

ChatGPT was built by OpenAI. It’s a type of Large Language Model (LLM) and part of the class of AIs called “generative AI”. A language model is a computer program designed to “understand” and generate human language (thus “generative AI”). Language models take as input a bunch of text and build statistics based on that text – things like which letter is most likely to appear next to the letter “k” or which word is most likely to come before “banana” – then use those statistics to generate new text on demand.

When a language model is generating text, like in response to a question, at the most basic level it is simply looking at the text, in this case a question, and using the statistics it has generated to choose the word most likely to come next.
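To make the idea concrete, here is a minimal Python sketch of the simplest possible language model: a bigram model that only counts which word follows which, then picks the most frequent follower. The function names and the tiny corpus are made up for illustration; real LLMs learn far richer statistics with neural networks and look at much more than the previous word.

```python
from collections import Counter, defaultdict
from typing import Optional


def build_bigram_counts(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    counts: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def most_likely_next(counts: dict[str, Counter], word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if `word` was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = "the cat sat on the mat . the cat ate . the dog sat on the rug ."
counts = build_bigram_counts(corpus)
print(most_likely_next(counts, "the"))  # cat
print(most_likely_next(counts, "sat"))  # on
```

"cat" wins after "the" simply because it follows "the" twice in the corpus, while "mat", "dog" and "rug" appear only once each.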

A Large Language Model (LLM) is just a language model trained on a large amount of text. It is estimated that GPT-3, the LLM that underlies the initial version of ChatGPT, was trained on 300-400 billion words of text taken from the internet and books. That training was, basically, showing it a word from a document, like this article, along with the words that preceded it in the document, up to a fixed limit called the context window (2,048 tokens for GPT-3, roughly 1,500 words).

So if an LLM were fed this very article, it might be shown the word “human” along with the words “ChatGPT was built by OpenAI … and generate” that led up to it.
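The shape of that training data can be sketched in a few lines of Python: slide along the text and, for each word, record the words before it (capped at a fixed window) as the input and the word itself as the answer to predict. This is a simplification that assumes whitespace-separated words; real models work on tokens (word pieces) and learn from these examples with a neural network rather than storing them. The function name is hypothetical.

```python
def training_pairs(text: str, window: int) -> list[tuple[list[str], str]]:
    """Turn text into (preceding words, next word) examples.

    `window` caps how many preceding words each example keeps,
    standing in for the model's context limit.
    """
    words = text.split()
    pairs = []
    for i in range(1, len(words)):
        context = words[max(0, i - window):i]  # at most `window` earlier words
        pairs.append((context, words[i]))
    return pairs


for context, target in training_pairs("ChatGPT was built by OpenAI", window=3):
    print(context, "->", target)
```

With a window of 3, the last example keeps only "was built by" as the context for "OpenAI"; the first word has fallen out of view.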

It turns out that when an LLM is fed nearly half a trillion words and their preceding text to build statistics with, those statistics capture quite complex and subtle features of language. That isn’t really a surprise. Human language isn’t random. It has a predictable structure, otherwise we couldn’t talk to each other.

It’s not just predictable; there is also a lot of repetition. There is repetition in the phrases we use, like “how’s the weather”, in sentence structure (“The cat sat on the mat. The bat spat on the hat.”), and even in document conventions. Imagine how many website privacy policies ChatGPT would have been trained on by using the internet as a source of text.

How ChatGPT generates answers

When you ask ChatGPT a question, the words of your question become the preceding text for the next word. This preceding text is called the “context”. It’s also known as “the prompt”.

Your question, the context, is used by ChatGPT to find the most statistically probable word that would begin the answer.

Let’s say your question is “Why is the sky blue?”. First, imagine how many times that question appears on the internet and in books. ChatGPT has definitely incorporated it many times into its statistics.

“Why is the sky blue?” is a 5-word question, and forms the 5-word context. So what is the 6th word of the context going to be? It’s going to be the word most likely to appear 5 words after “why” in all the text ChatGPT has ever seen, as well as 4 words after “is”, 3 words after “the”, 2 words after “sky” and 1 word after “blue”.

(The question mark is also important, but we’re ignoring that for this simple explanation.)

That word, the most probable word to fit all those conditions at the same time, might be “The”. It’s a common way to start an answer. Now our context has 6 words:

“Why is the sky blue? The”

And the process is repeated:

“Why is the sky blue? The sky”

and repeated:

“Why is the sky blue? The sky is”

“Why is the sky blue? The sky is blue”

“Why is the sky blue? The sky is blue because”

The context grows one word at a time until the answer is completed. ChatGPT has learned what a complete answer looks like from all the text it has been fed (plus some extra training provided by OpenAI).

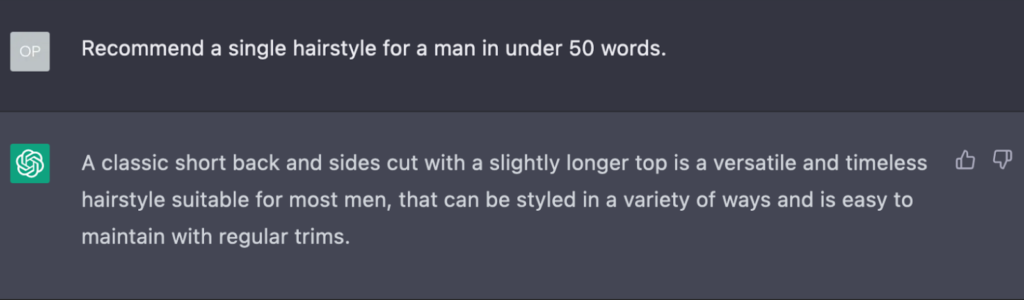
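The word-by-word loop can be sketched in Python. The `next_word` function is a hypothetical stand-in for the model: here it is a hard-coded lookup table keyed by the whole context so far, and a missing entry plays the role of the model deciding the answer is complete.

```python
from typing import Callable, Optional


def generate(prompt: str, next_word: Callable[[str], Optional[str]], max_words: int = 20) -> str:
    """Grow the context one word at a time until the model signals it is done."""
    context = prompt
    for _ in range(max_words):
        word = next_word(context)  # the model looks at the WHOLE context so far
        if word is None:           # None stands in for an "end of answer" signal
            break
        context = f"{context} {word}"
    return context


# A hypothetical, hard-coded stand-in for the model's statistics.
CANNED = {
    "Why is the sky blue?": "The",
    "Why is the sky blue? The": "sky",
    "Why is the sky blue? The sky": "is",
    "Why is the sky blue? The sky is": "blue",
    "Why is the sky blue? The sky is blue": "because",
}

print(generate("Why is the sky blue?", CANNED.get))
# Why is the sky blue? The sky is blue because
```

The `max_words` cap mirrors the fact that real systems also stop at a length limit, even if the model never signals it is finished.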

You may have heard about “prompts” and “prompt engineering”. Because every word in the context has an effect on finding the next most probable word for the answer, every word you include will act to constrain or shape the possibilities for the next word. For example, here is a prompt and answer from ChatGPT:

Add a few related words and the answer shifts in a predictable way:

This, in a nutshell, is what prompt engineering is about. You are trying to choose the best words to use to constrain ChatGPT’s output to the type of content you are interested in. Take a common prompt like this:

“Imagine you are an expert copywriter and digital marketer with extensive experience in crafting engaging and persuasive ad copy for Facebook ads. Your goal is to create captivating ad copy for promoting a specific product or service”

Don’t be fooled into thinking that there is some kind of software brain on a server in a giant data centre in the Pacific Northwest imagining it is an expert copywriter. Instead, think of all the websites run by copywriters and digital marketers and their blog articles where they discuss Facebook ads or writing engaging copy.

You can read OpenAI’s guide to prompt engineering here, and this guide to prompt engineering goes even deeper.

It is without doubt amazing that ChatGPT does what it does. It is also amazing that the process is so deceptively simple – looking at which words come before other words. But it takes nearly half a trillion words of human-to-human communication to provide the data to make it happen.

This is a simplification and leaves out important details, but what you need to know is that generative AIs like ChatGPT, Bard and Bing are always only choosing the next most likely word to add to a reply. It’s a mechanical process prone to producing false information. On top of this unavoidable feature of blind, probabilistic output, actual randomness is added to their choices to make the output more “creative” or “interesting”.
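In OpenAI's API, for example, this randomness is controlled by a `temperature` parameter. As a rough sketch, assuming the model has given every candidate word a score: at temperature 0 you always take the top-scoring word, and higher temperatures flatten the odds so less likely words are picked more often. The scores and the function name below are made up.

```python
import math
import random


def sample_next_word(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Pick the next word from model scores, with optional randomness.

    temperature == 0 means always take the single most likely word (greedy).
    Higher temperatures flatten the distribution, so unlikely words appear more often.
    """
    if temperature == 0:
        return max(scores, key=scores.get)
    # Softmax with temperature; subtracting the top score keeps exp() from overflowing.
    top = max(scores.values())
    weights = {w: math.exp((s - top) / temperature) for w, s in scores.items()}
    total = sum(weights.values())
    words = list(weights)
    probs = [weights[w] / total for w in words]
    return rng.choices(words, weights=probs, k=1)[0]


scores = {"because": 3.0, "due": 1.5, "since": 1.0}  # made-up scores
rng = random.Random(0)
print(sample_next_word(scores, 0, rng))  # because
print([sample_next_word(scores, 1.5, rng) for _ in range(5)])
```

Changing the seed, or not fixing one at all, gives different mixes of words, which is why the same prompt can produce different answers.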

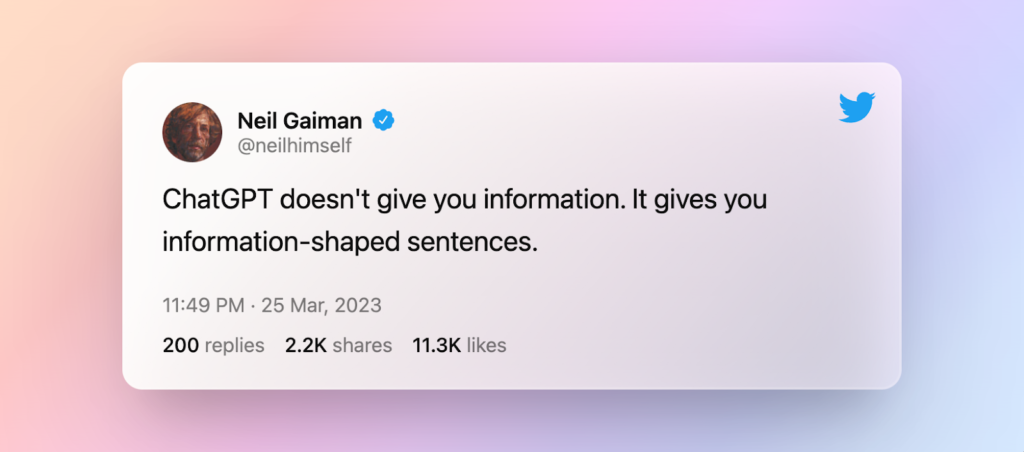

Generative AIs have been trained on enough logic and reasoning examples to mimic how we use language to communicate logic and reason. But they are, in the end, text production programs. There is no logic or reasoning as humans use it involved in producing that text, even if the text contains a logical argument. So always carefully review the text they produce for you.

As one person who spends a lot of time producing text said:

Or is ChatGPT actually smart?

Having said all that, there is an argument that generative AIs, in particular LLMs, might be doing more than just producing text. This argument says they might be building a model of the world and the features of the world as they build their billions of statistics about text. And that these models might be what is making generative AIs so powerful.

Giving some partial support for this argument is the number of “emergent” abilities generative AI is showing. The backers of this argument say these are proof there is more going on than picking which word should come next. The emergent abilities are quite specialised, like naming geometric shapes. It’s not like it is teaching itself how to pilot a plane. You can find a list of the emergent abilities documented so far in this article. Be warned, they aren’t very impressive.

How to use generative AI and ChatGPT in your business

For these next sections we’re going to mostly refer to ChatGPT, but the advice applies to any publicly available generative AI, including Google Bard and Microsoft Bing.

Use ChatGPT to work faster and smarter

ChatGPT has ingested more pro forma correspondence, business documentation, business books, corporate communications, RFPs, agreements, contracts, pitch decks, etc, than you can possibly imagine.

This makes it the ultimate tool for producing the first draft of just about any document, including replies to emails, white papers, case studies, grant proposals, RFPs, etc. It can also serve as an editor, helping to turn your rambling sketch of an email or an article introduction or anything into clear, coherent sentences and paragraphs you can further revise.

With ChatGPT you never have to delay writing an email or starting a document because you don’t know where to begin. Or because you’re completely out of your comfort zone, in over your head, or have no idea what you’re supposed to say. ChatGPT has seen it all and can help you write whatever you need to write.

Now, this does come at a slight penalty, which for most things won’t matter now and in the long run will probably be welcome: ChatGPT has a distinctive “voice” that will be noticeable to anyone familiar with it.

Also, because ChatGPT is always choosing the most probable word each and every time, what it outputs can be quite boring or clichéd. Sometimes this is a good thing. Clear communication is based on convention. But don’t expect creativity or original ideas.

ChatGPT is perfect for streamlining your production of all those necessary business communications that humans use to keep things running. By adopting ChatGPT as part of your process, you will be able to execute on these faster and at a higher level, leaving you more time for the work that really moves the needle.

If you’re not sure how to command ChatGPT to produce what you need, this prompt engineering guide will help.

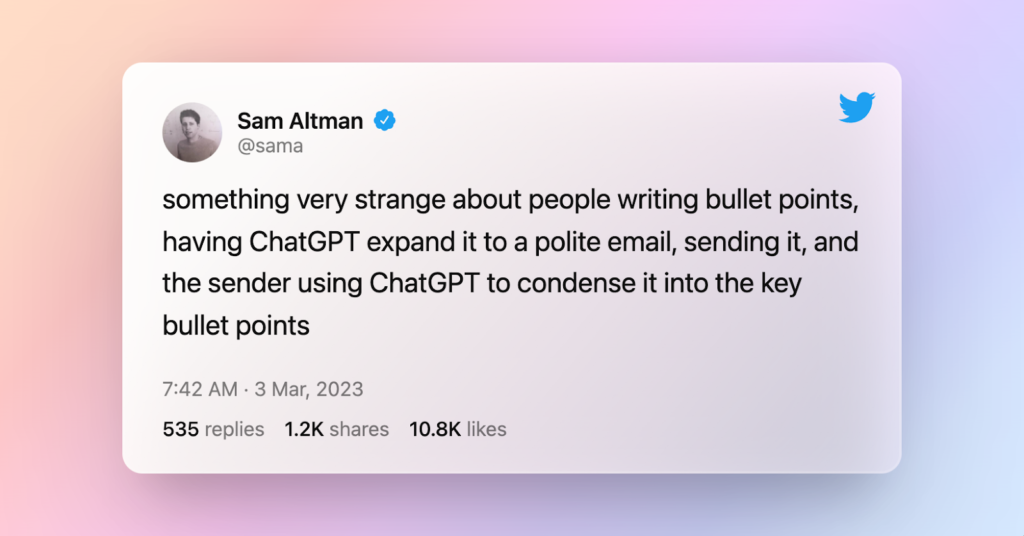

Of course everyone else you interact with will be doing the same thing. So expect the speed of business to increase and hope all your third parties prompt ChatGPT to keep their emails brief.

As Sam Altman, the CEO of OpenAI, tweeted:

Use 3rd party AI tools to accelerate your business

In this section we are going to focus on data and text related tools. The AI-generated image and video space is also huge: DALL-E, Midjourney, Adobe Firefly, Stable Diffusion, etc. There are hundreds of tools, but for most businesses image and video are part of marketing rather than their core product, so we’re sticking with the most common use cases.

There are already lots of startups offering AI-powered tools of every variety. But they’re about to face the twin behemoths of Google Workspace and Microsoft 365. Both have recently announced the integration of AI assistants into their offerings (Google’s, Microsoft’s).

How will these work? Imagine ChatGPT knows everything about your business. It has memorised every report, every spreadsheet, every presentation, and every email. You can ask it for numbers or summaries or ask it to create presentations or documents.

Some of these features will help reduce the time you spend on the boring necessities of keeping your business running. Others, like integration with spreadsheets, will help you find answers, create forecasts and analyse trends faster and more easily than you could before. It’s even possible you can’t do regular forecasts at the moment because no one on your team has the expertise. That’s going to change.

Again, this is going to give you more time to spend on doing the things that really make a difference to your business – planning, strategy, talking to customers, building relationships with partners. Unless you make the mistake of burying yourself under all the reports it will be so easy to create. But you can always ask the system to summarise them for you.

Non-Google, Non-Microsoft AI tools for your business

At the time of writing there isn’t even a beta program for Microsoft’s and Google’s AI-powered offerings, and neither company has given a rough date for general availability. On the other hand, lots of startups are developing services based on OpenAI’s APIs, using the same LLM behind ChatGPT to create new products.

The site Super Tools has a database of AI-based startups. You might find some products in there that can help you.

If we continue to focus on text (Super Tools includes image, audio and video tools as well) these products fall into two main categories of functionality: content generation and search.

Content generation covers things like chatbots, writing assistants and coding assistants. Some of these services are nothing more than a website that adds a detailed prompt (or context) to your own instructions or queries, sends the combination to ChatGPT’s backend, and passes the response back to you.
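Here is a minimal Python sketch of what such a wrapper service does on its server, assuming OpenAI's chat message format (a list of `role`/`content` dictionaries). The hidden prompt text and the function name are hypothetical, and the actual network call to the API is left out.

```python
# Hypothetical "secret sauce" prompt a wrapper service might keep on its server.
HIDDEN_PROMPT = (
    "You are an experienced business analyst. Assess the business idea below "
    "and return its strengths, weaknesses, opportunities and threats as four short lists."
)


def build_messages(user_input: str) -> list[dict[str, str]]:
    """Combine the service's hidden prompt with what the user typed.

    The result follows the role/content layout of OpenAI's chat API; the service
    would send it to the API and pass the reply straight back to the user.
    """
    return [
        {"role": "system", "content": HIDDEN_PROMPT},
        {"role": "user", "content": user_input},
    ]


messages = build_messages("A subscription service for refurbished office chairs.")
```

Pasting an equivalent prompt followed by your own text into ChatGPT yourself produces essentially the same request.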

An example of this is VenturusAI. At least it’s free. Think of these services as a lightly tailored version of the standard ChatGPT experience already provided by OpenAI, even if the design or presentation obscures that. A few hours fiddling with a prompt in OpenAI’s ChatGPT interface might get you the same result without the cost of another SaaS subscription.

If the output is short enough and, like VenturusAI, they’re nice enough to show you example results, you can just paste their examples into ChatGPT and ask it to produce the same kind of result for your own inputs.

Content generation with AI

Content generation is already impacting programming, legal services, not to mention copywriting of all kinds, including real estate and catalogue listings.

The impact of content generation tools is already being felt. According to Microsoft, in projects using its GitHub Copilot code generation assistant, 40% of the code is now AI-generated. Given that this code probably took a fifth or a tenth of the time it would take a human programmer to write, the productivity increase is enormous.
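To put a rough number on that, here is a back-of-envelope sketch using the paragraph's own assumptions: 40% of the code is AI-generated, and that code costs a fifth or a tenth of the usual time. It also assumes every line would otherwise take the same time to write, and ignores time spent reviewing and fixing AI output. The function name is hypothetical.

```python
def speedup(ai_share: float, ai_time_ratio: float) -> float:
    """Overall speed-up when a share of the code costs only a fraction of the usual time.

    ai_share: fraction of the code that is AI-generated (0.4 for the 40% figure).
    ai_time_ratio: time for that code relative to writing it by hand (e.g. 1/5 or 1/10).
    """
    new_time = (1 - ai_share) + ai_share * ai_time_ratio  # as a fraction of the old total
    return 1 / new_time


for ratio in (1 / 5, 1 / 10):
    print(f"{ratio:.2f} -> {speedup(0.4, ratio):.2f}x")
# 0.20 -> 1.47x
# 0.10 -> 1.56x
```

Under these assumptions the total time to write the code falls by roughly a third. Notice that the 60% still written by hand dominates, so making the AI part even faster changes the result only a little.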

Smarter search with AI

Search is just what it sounds like, but imagine a search engine that’s smarter than Google and can respond to your search request with exactly the information you need written in a way that’s easy to understand.

Dedicated search tools are springing up based on OpenAI’s APIs. Some target specific use cases, like Elicit for searching scientific papers, others, like Libraria, are more general – upload any documents you want and it’ll index them and give you a “virtual assistant” to use as a chat interface to query them.

Build your own AI tools to power your business

There is no reason you can’t use OpenAI’s APIs yourself. They offer methods to fine-tune a model and to create embeddings. You’ll need a programmer to do this. Or, if you’re feeling brave and/or patient, you can ask ChatGPT to help you build a solution.

Fine-tuning Large Language Models

Fine-tuning uses hundreds of prompt-response pairs (which you supply) in order to train an LLM to do things like answer chat queries based on information you care about. For example, you may get the prompt-response pairs from transcriptions of customer service enquiries. You upload them to OpenAI. It uses them to create a new, specialised version of one of its base models that is stored and runs on its servers. Once it is built, you use the API to pass chat-based enquiries to your dedicated model and get responses back in return.
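Training data for fine-tuning is uploaded as a file. OpenAI's original fine-tuning endpoint expected JSON Lines (one JSON object per line), each holding a `prompt` and a `completion`; newer chat models use a `messages`-based layout instead, so check the current fine-tuning documentation before relying on this. A minimal Python sketch that writes such a file, with hypothetical example pairs standing in for your real transcripts:

```python
import json

# Hypothetical example pairs; in practice these come from your own transcripts.
examples = [
    ("Can I return a widget after 30 days?", "Returns are accepted within 60 days of delivery."),
    ("Do you ship to New Zealand?", "Yes, we ship to New Zealand within 10 business days."),
]


def write_training_file(pairs: list[tuple[str, str]], path: str) -> None:
    """Write one JSON object per line (JSONL), each holding a prompt/completion pair."""
    with open(path, "w", encoding="utf-8") as f:
        for prompt, completion in pairs:
            f.write(json.dumps({"prompt": prompt, "completion": completion}) + "\n")


write_training_file(examples, "training_data.jsonl")
```

Using `json.dumps` for every line takes care of quotes and newlines inside your transcripts, which is where hand-built training files most often break.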

If you’re clever, you can train the model so that some of its responses contain a message signalling that a human is needed to deal with the enquiry, and your chat system can then make that happen.
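A minimal sketch of that routing step, assuming you trained the model to include a marker string in its reply whenever a person should take over. The marker `[HUMAN_NEEDED]` and the function name are hypothetical; pick any string that will never appear in a normal answer.

```python
# Hypothetical marker the fine-tuned model is trained to emit when it can't help.
HANDOFF_MARKER = "[HUMAN_NEEDED]"


def route_reply(model_reply: str) -> tuple[str, bool]:
    """Return the text to show the customer and whether to alert a human agent."""
    if HANDOFF_MARKER in model_reply:
        # Don't show the raw marker; tell the customer a person is coming instead.
        return "Let me pass you to one of our team.", True
    return model_reply, False


text, needs_human = route_reply("[HUMAN_NEEDED] Customer is disputing a refund.")
```

Your chat system would then use `needs_human` to open a ticket or page an agent.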

Embeddings for semantic search

Embeddings are used in querying across large amounts of text. Imagine asking normal language questions about information hidden in your company’s folder of reports and getting back answers.

For example, if you have hundreds of PDFs with details about the different models of widget you produce, you can create embeddings for all the documents, and then you will be able to ask things like “Which widget is best for sub-zero environments?” or “Which widget is green and 2 metres long?”. Even better, your customers will be able to ask those questions themselves.

The next few paragraphs give some basic information on embeddings and how they are used. You might want to skip them the first time through.

Embeddings are basically a list of numbers. An embedding can be thought of as an address in “idea space”. Instead of the three dimensions of the space we live in, this “idea space” has over 1,500. Every word or chunk of text can have its own embedding generated for it by an embedding model. Texts that are conceptually close together will have embeddings that are close together, as measured by a distance or similarity metric (such as cosine similarity) that works in any number of dimensions.

For example, the embeddings for “apple” and “orange” will be close together because they are both fruit. But the embeddings for “mandarin” and “orange” will be even closer together because they are both citrus fruits.
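Cosine similarity is one common way to measure how close two embeddings are: it compares the directions the two vectors point in. This Python sketch uses made-up three-dimensional vectors chosen to mimic the fruit example; real embeddings come from a model and have far more dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 means pointing the same way in "idea space"; values near 0 mean unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up 3-dimensional vectors; real embeddings have ~1,500 dimensions.
embeddings = {
    "apple":    [0.9, 0.1, 0.0],
    "orange":   [0.7, 0.6, 0.1],
    "mandarin": [0.6, 0.7, 0.1],
    "invoice":  [0.0, 0.1, 0.9],
}
```

With these toy vectors, orange and mandarin score higher than apple and orange, and both fruit pairs score far higher than apple and invoice.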

Surprisingly, this also works when you get the embeddings for hundreds of words of text.

Once you have embeddings for every chunk of every document you want to search, stored in a database that can do those multi-dimensional distance calculations, like Pinecone or FAISS, you’re ready to do the actual search.
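At its core, the database's job is nearest-neighbour search: given a query embedding, return the stored chunks closest to it. Vector databases do this at scale with clever indexing; this plain-Python sketch simply ranks every chunk by Euclidean distance, which is workable only for small collections. The chunks and their three-dimensional vectors are made up.

```python
import math

# Made-up chunks with toy 3-dimensional "embeddings"; real ones come from an embedding model.
store = [
    ("Widget A: rated to -40 degrees C", [0.9, 0.1, 0.0]),
    ("Widget B: green, 2 metres long", [0.1, 0.9, 0.1]),
    ("Widget C: indoor use only", [0.2, 0.1, 0.9]),
]


def top_k_chunks(query_vec: list[float], chunks: list[tuple[str, list[float]]], k: int = 2) -> list[str]:
    """Exact nearest-neighbour search: rank every chunk by Euclidean distance to the query."""
    ranked = sorted(chunks, key=lambda item: math.dist(query_vec, item[1]))
    return [text for text, _ in ranked[:k]]


print(top_k_chunks([0.8, 0.2, 0.1], store, k=1))  # ['Widget A: rated to -40 degrees C']
```

The query vector here stands in for the embedding of something like “Which widget is best for sub-zero environments?”.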

This is a bit clever. To do the search, you take the user’s query and, along with a selection of pieces of your documents, send it to your LLM, like ChatGPT, to generate an answer that is probably not completely correct but is close.

Then you get the embedding for that answer and use that to search your database for the chunks whose embeddings are closest in distance to it. You can then either present the pieces of document to your user, or send the question and those chunks (limited to the size of the allowed context), to ChatGPT for it to generate a properly structured answer.
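The two-step search can be sketched in Python, assuming you already have chunks with embeddings. Searching with the embedding of a generated draft answer, rather than of the question itself, is sometimes called HyDE (hypothetical document embeddings). The `llm` and `embed` parameters are hypothetical placeholders for calls to your model provider, the context budget is counted in words as a rough stand-in for tokens, and for simplicity the first request sends only the question, without any document pieces.

```python
import math
from typing import Callable

Vector = list[float]


def answer_with_documents(
    question: str,
    chunks: list[tuple[str, Vector]],
    llm: Callable[[str], str],      # hypothetical: sends a prompt, returns the reply
    embed: Callable[[str], Vector], # hypothetical: returns an embedding for text
    k: int = 3,
    max_context_words: int = 2000,
) -> str:
    # 1. Ask the model for a rough, possibly wrong, draft answer.
    draft = llm(f"Answer briefly: {question}")
    # 2. Embed the draft and rank chunks by how close they are to it.
    draft_vec = embed(draft)
    ranked = sorted(chunks, key=lambda c: math.dist(draft_vec, c[1]))
    # 3. Keep as many of the best chunks as fit in the context budget.
    selected, used = [], 0
    for text, _ in ranked[:k]:
        n = len(text.split())
        if used + n > max_context_words:
            break
        selected.append(text)
        used += n
    # 4. Ask for a properly structured answer grounded in those chunks.
    prompt = "Using only these extracts:\n" + "\n---\n".join(selected) + f"\n\nQuestion: {question}"
    return llm(prompt)
```

Passing `llm` and `embed` in as functions keeps the sketch independent of any particular provider, and makes it easy to test with fakes.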

This article goes into more details and strategies on using embeddings.

If you’re more technically minded, you can connect ChatGPT to any number of tools using a library called LangChain. It cleverly uses prompts to direct ChatGPT to output calls to external services, like calculators, databases and web searches, and LangChain handles calling the service, collecting the results and adding them to the current conversation with ChatGPT.
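LangChain's own classes are more elaborate, but the core trick can be sketched in plain Python: the prompt tells the model to write a line in an agreed format when it wants a tool, your code spots those lines, runs the matching function and feeds the result back. The `ACTION:` format and the two toy tools are hypothetical and are not LangChain's API.

```python
import re

# Hypothetical registry of tools the model is allowed to call.
TOOLS = {
    "word_count": lambda text: str(len(text.split())),
    "uppercase": lambda text: text.upper(),
}

# Hypothetical convention, set out in the prompt: the model writes
# "ACTION: <tool_name>: <input>" on its own line when it needs a tool.
ACTION_PATTERN = re.compile(r"^ACTION:[ \t]*(\w+):[ \t]*(.*)$", re.MULTILINE)


def run_tool_calls(model_output: str) -> list[str]:
    """Run every tool call found in the model's output and collect the results."""
    observations = []
    for name, arg in ACTION_PATTERN.findall(model_output):
        tool = TOOLS.get(name)
        if tool is None:
            observations.append(f"OBSERVATION: unknown tool {name!r}")
        else:
            observations.append(f"OBSERVATION: {tool(arg)}")
    return observations
```

The observations would then be appended to the conversation and sent back to the model so it can continue with the results in its context.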

This pre-dates and is similar to OpenAI’s plugins, but it’s more versatile and can be tailored to your specific needs.

Try using agents to do your work for you

Using LLMs to create autonomous agents has grabbed a lot of recent attention. By integrating a generative AI like ChatGPT into a system that can do things like search the web, run commands in a terminal, post to social media and other actions that can be driven by software, you can build a system that can make simple plans and execute them. Kind of.

These systems, like AutoGPT and BabyAGI, work by using a special prompt (that you can see an example of here) that tells ChatGPT what it is to do and includes a list of external commands it can call. It also tells it to output information only in JSON format instead of human-readable text, so it can be easily read by other programs.

AutoGPT feeds the prompt to ChatGPT and collects its response in JSON format. It executes any commands it finds in the response and incorporates the results from those to create the next prompt to feed to ChatGPT.
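Here is a stripped-down version of that loop in Python. The JSON shape (`command` and `argument` fields), the `search` command and the `model` callable are hypothetical simplifications, not AutoGPT's actual schema; in a real agent, `model` would call ChatGPT and the commands would reach the web, files and so on.

```python
import json
from typing import Callable

# Hypothetical commands; a real agent would wire these to web search, files, etc.
COMMANDS: dict[str, Callable[[str], str]] = {
    "search": lambda query: f"(pretend search results for {query!r})",
}


def agent_loop(goal: str, model: Callable[[str], str], max_steps: int = 5) -> list[str]:
    """Prompt the model, run the command it asks for, and feed the result back in."""
    history: list[str] = []
    for _ in range(max_steps):
        # The prompt carries the goal plus everything that has happened so far.
        prompt = json.dumps({"goal": goal, "history": history})
        try:
            reply = json.loads(model(prompt))  # the model is told to answer only in JSON
        except json.JSONDecodeError:
            history.append("model returned invalid JSON")
            continue
        if not isinstance(reply, dict):
            history.append("model returned unexpected JSON")
            continue
        name, arg = reply.get("command"), reply.get("argument", "")
        if name == "finish":
            history.append(f"finished: {arg}")
            break
        handler = COMMANDS.get(name)
        result = handler(arg) if handler else f"unknown command {name!r}"
        history.append(f"{name}({arg!r}) -> {result}")
    return history
```

The `max_steps` cap and the handling of malformed replies are there because, as noted below, these agents easily go off track and need a hard stop.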

It uses ChatGPT’s context, which is about 3000 words, to create a short term memory for ChatGPT that can hold the goal you’ve given it, the remaining steps in its “plan” and any intermediate results it needs. This makes it somewhat capable of devising and executing short plans. We use the word “somewhat” because it needs constant monitoring and errors can cause it to go off track.
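Keeping that short-term memory inside the context limit can be sketched like this: always keep the goal, then add the most recent results until the budget is used up. Word counts are a rough stand-in for tokens (a tokenizer such as OpenAI's tiktoken would give exact counts), and the function name and the 3,000-word default are assumptions based on the estimate in this paragraph.

```python
def build_context(goal: str, history: list[str], max_words: int = 3000) -> list[str]:
    """Keep the goal, then as many of the newest history items as fit in max_words."""
    budget = max_words - len(goal.split())
    kept: list[str] = []
    for item in reversed(history):  # walk backwards from the newest item
        cost = len(item.split())
        if cost > budget:
            break  # this item and everything older is dropped, i.e. "forgotten"
        kept.append(item)
        budget -= cost
    return [goal] + kept[::-1]  # restore chronological order
```

Dropping the oldest items first is the simplest policy; it is also why a long-running agent can lose track of early results unless they are stored elsewhere, for example with embeddings.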

The short term memory can be extended using embeddings as we discussed above. And there is an active community working hard to make the LLM agents more robust and more capable. But for now, you may be able to use an agent to automate a simple workflow. It is particularly good at compiling and summarising information from the internet. Just be sure to do lots of testing and don’t make it an essential part of your infrastructure.

Use no-code/low-code to build in-house AI tools

Because the OpenAI API returns JSON for your requests, you can access it from any no-code/low-code app builder that supports third-party APIs.
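The part of the response you usually need is the generated text. This Python sketch parses an abridged example of the JSON returned by OpenAI's chat completions endpoint (real responses also include fields such as `created`, `model` and `usage`, and the values here are made up); in a no-code tool you would map the same path, `choices`, then the first item, then `message`, then `content`, to a field.

```python
import json

# An abridged example of the JSON shape returned by OpenAI's chat completions endpoint.
raw_response = """
{
  "id": "chatcmpl-example",
  "object": "chat.completion",
  "choices": [
    {
      "index": 0,
      "message": {"role": "assistant", "content": "Dear customer, thank you for your email."},
      "finish_reason": "stop"
    }
  ]
}
"""


def reply_text(response_body: str) -> str:
    """Pull the generated text out of the response body."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]


print(reply_text(raw_response))  # Dear customer, thank you for your email.
```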

If you don’t need apps but just want to integrate generative AI into your workflows, Zapier and Make now support OpenAI in their integrations. You can use it to automatically draft emails or generate customer service tickets based on incoming emails. Anywhere in your workflows where a human has been needed to make a basic planning or routing decision is a candidate for being automated now.

Build your own generative AI startup

Using the same tools and techniques you would use to build in-house AI tools, you can build a product.

But that’s just the first level. Beyond leveraging OpenAI’s APIs you can use services like Cerebras to build and train your own model.

Training your own model might be out of reach, but fine-tuning an existing model might be all you need. Fine-tuning a model for a specific domain already has a name – “models as a service”. These models can help users in a particular domain do everything from fixing their spelling to estimating the cost of repairs to designing new molecules. Of course, your use case will dictate the model you fine-tune, which will impact your costs.

The power of having your own fine-tuned model that users interact with is that once you have users you now have a source for even more training data, creating an ongoing cycle of fine-tuning and model performance improvement that can build a moat for you in your market.

Go forth and generate

We hope this article has given you the understanding, inspiration and links that you need to get started using generative AI in your business.

Start small: use ChatGPT to draft a few emails (but double-check them). Browse Super Tools and see if there are any tools on there that might address one of your workflow or business process pain points.

Small steps and strategic integration of generative AI are key to taking advantage of this huge technological leap forward. Start today and see how fast and far it can take you.